Imagine if self-driving cars could quickly learn to recognize new road signs or if medical imaging systems could adapt to identify new abnormalities with just a few examples.

Researchers at Concordia University are working to make this possible. In a study published in Nature’s Scientific Reports, PhD student Amin Karimi and Professor Charalambos Poullis from the Gina Cody School of Engineering and Computer Science introduce a new method for improving how artificial intelligence (AI) recognizes images, even when it has very few examples to learn from.

The method tackles a task known as “few-shot semantic segmentation,” in which AI must understand the contents of an image in fine detail by identifying and labeling every pixel. The “few-shot” part means that the AI learns to recognize new objects from only a handful of labeled examples, much as humans can.

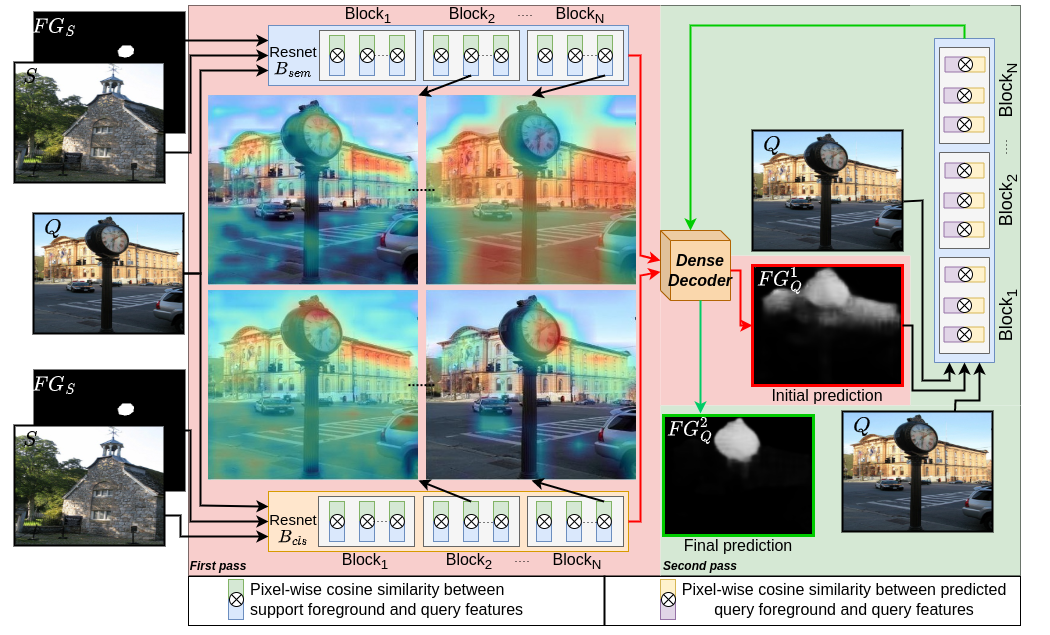

Karimi and Poullis developed a new approach that combines information from two types of AI models: one that classifies whole images and another that segments images into their component parts. By integrating the strengths of both models, the researchers created a more capable system for understanding images.

Charalambos Poullis

Amin Karimi