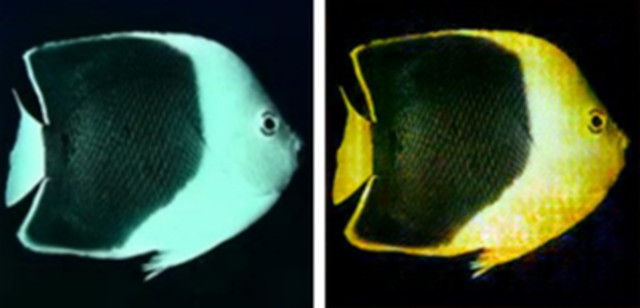

Imagine diving into the ocean and capturing stunningly clear photographs of marine life, or broadcasting underwater explorations with vivid, high-quality visuals.

A new study published in IEEE Transactions on Broadcasting introduces a novel approach to enhance underwater images.

This work was carried out by PhD student Alireza Esmaeilzehi (now a postdoctoral fellow at the University of Toronto) and visiting researcher Yang Ou (now at Chengdu University), under the supervision of Professors M. Omair Ahmad and M.N.S. Swamy, both from the Department of Electrical and Computer Engineering at the Gina Cody School of Engineering and Computer Science.

The team’s method leverages deep learning, a branch of artificial intelligence in which neural networks, loosely inspired by the human brain, learn patterns directly from data.

The method integrates information from several sources. These include underwater medium transmission maps, which describe how much light from each part of the scene survives absorption and scattering on its way through the water to the camera, and atmospheric light estimates, which characterize the ambient background lighting. Combining these cues gives the network a richer set of features to draw on, improving its ability to restore image quality.
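The article does not detail the network itself, so the sketch below only illustrates the two physical cues mentioned above. It assumes the widely used simplified underwater image formation model, in which an observed image I is the clean scene J attenuated by a transmission map t plus background (atmospheric) light A scattered in: I = J·t + A·(1 − t). Knowing t and A lets you invert this relation to recover J. The function names, the per-channel attenuation values, and the channel-stacking `stack_features` step are hypothetical illustrations, not the authors' published method.

```python
import numpy as np

def synthesize_underwater(J, t, A):
    """Simplified formation model (assumption): I = J * t + A * (1 - t).

    J: clean scene, shape (H, W, 3), values in [0, 1]
    t: per-channel transmission map, shape (H, W, 3), values in (0, 1]
    A: atmospheric (background) light, shape (3,)
    """
    return J * t + A * (1.0 - t)

def restore(I, t, A, t_min=0.1):
    """Invert the model: J = (I - A) / t + A.

    t is floored at t_min so that nearly opaque regions do not blow up noise.
    """
    t_safe = np.maximum(t, t_min)
    return np.clip((I - A) / t_safe + A, 0.0, 1.0)

def stack_features(I, t, A):
    """Hypothetical fusion: concatenate the image, the transmission map and an
    atmospheric-light map along the channel axis to form a network input."""
    A_map = np.broadcast_to(A, I.shape)  # repeat A at every pixel
    return np.concatenate([I, t, A_map], axis=-1)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, W = 4, 5
    J = rng.uniform(0.0, 1.0, (H, W, 3))
    depth = rng.uniform(1.0, 3.0, (H, W, 1))
    # Hypothetical attenuation per channel (R, G, B): red fades fastest in water.
    beta = np.array([0.6, 0.15, 0.1])
    t = np.exp(-beta * depth)             # broadcasts to shape (H, W, 3)
    A = np.array([0.1, 0.5, 0.6])         # hypothetical bluish-green background light
    I = synthesize_underwater(J, t, A)
    print(np.allclose(restore(I, t, A), J))   # True
    print(stack_features(I, t, A).shape)      # (4, 5, 9)
```

In practice neither t nor A is known for a real photograph and both must be estimated, which is where a learned model can help; the exact inversion above works only because the example generates I from known values.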

M. Omair Ahmad

M.N.S. Swamy